My paper was proved wrong. After a sleepless night, here’s what I did next

Two juvenile lynx (Lynx lynx) photographed by a camera trap. Data on individual animals can be combined with species occurrence data, but a flawed statistical model could lead to an underestimation of population size. Credit: KORA

As a statistician with 20 years of experience in the field of ecology, I recently faced a challenging moment. In August, some colleagues in Canada published a response1 to a paper that I co-wrote a decade ago, showing that the method my co-authors and I proposed back then is fundamentally flawed.

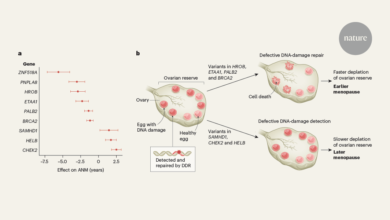

The method in question is a statistical model2 that combines several sources of data on individual animals, as well as species-level data, to improve the estimation of animal abundance in a particular environment. This is important, because having a reliable estimate of abundance is crucial for guiding management efforts to protect at-risk species, setting hunting quotas and regulating invasive species. Incorrect or biased estimates of abundance could lead to a waste of resources and badly informed management strategies.

For instance, imagine you’re managing a hunting reserve that’s home to exactly 1,000 game birds. If a hunting quota is set at 20% of the total population, but a model overestimates the population by 10%, putting it at 1,100 birds, you’ve just signed off on the hunting of 20 more birds than you should have done.

Jack Thomas at the University of Victoria, Canada, Simon Bonner at Western University in London, Canada, and Laura Cowen, also at Victoria, have shown that the model that we developed does the opposite of that: it systematically underestimates abundance. The main reason for this is that we did not account for how animals occupy space during a survey. If animals move slowly or have small home ranges, they might be detected at only one site per sampling period, leading to an underestimation of their true abundance. Conversely, if they move quickly or have large ranges, they could be detected at multiple sites, potentially skewing the data. Laetitia Blanc, the first author of our paper, was a PhD candidate at that time but she has since left academia to become a secondary-school teacher. She had nothing to do with the flaw in the method, and nor did my co-authors. As the statistician of our group, as well as the senior author, I see this as my mistake and mine alone.

Nightmare timing

I got the news of our paper being debunked late at night, just as I was about to go to sleep. Fridolin Zimmermann, a wildlife biologist at the KORA conservation foundation in Ittigen, Switzerland, and a co-author of our paper, shared a link to the new response to our work over e-mail, and at first I attempted to ignore it. I tried to go to sleep, but I couldn’t. I got up, opened my laptop and started to read. And I realized pretty quickly that the authors were right about our mistakes.

I went through a heady mix of emotions and asked myself a long series of late-night questions. Why didn’t I see the problem? What should I tell my colleagues? Has anyone actually used the model to inform conservation strategies? What if all the other ideas I had and will have are broken, too? What’s the community going to think of me?

In hope of a catharsis, I’ve decided to share how I responded to the experience.

My first action was to e-mail the authors of the response paper, and congratulate them on their work. I also shared my surprise at not having been notified before the publication of the paper. They did apologize for forgetting to put me in the loop. No hard feelings: I appreciate the authors for identifying our mistakes and taking the time to explain and address them in a paper. They’ve corrected the scientific record, and for that I’m deeply grateful.

My second action was to publicize the new paper, and what it meant for our previous work, in a short thread on X. The feedback from the ecology community has been positive, which has been a huge relief, and has made me feel good about us as a collective of people. My co-authors have also been very supportive.

Here’s some advice I have for others who find themselves in similar circumstances.

Don’t take it (too) personally

There is a real difficulty in responding to such a situation, and this challenge is probably greater for early-career researchers than for senior ones. I think the key is not to take it personally. Now that my career is established, this is much easier for me, because I have already experienced failures, and I have accomplishments that make up for them.

Twenty years ago, when I had just finished my PhD, I would have taken a moment such as this much more personally. I wish I had realized sooner, as a young researcher, that the most important thing to establish early in your career — and to continually reassess as you grow, both personally and professionally — is a balance between work and personal life. It’s so easy to get swallowed up by your work when it’s something you love to do, or simply because you’re caught up in the pressure to succeed or get a permanent position.

A mistake in science is bothersome, but, at the end of the day, it’s just part of work: retaining a sense of perspective makes setbacks more manageable. I am grateful to be surrounded by a community of colleagues whom I can talk to, especially when experiencing mistakes. Overall, I think that if we, as researchers, start sharing our failures more openly, it’ll be easier for us to tackle them together, as a community. That way, we can fix things without blaming anyone.

All in for open and reproducible science

In our original paper, my co-authors and I made the code available, which allowed our colleagues to reproduce our (flawed) results. This emphasizes the importance of making research reproducible and open. Usually, I work with R Markdown, which combines text written in the Markdown markup language with R code, to write text, include equations via LaTeX and analyse data in a single reproducible document. I also use Git/GitHub to track code changes, often in collaboration with colleagues, and share my GitHub code in the final paper.

It’s a relief, in the end, that despite us sharing the code, the method hasn’t been used other than to disprove it. One thing we could have done to spot this issue earlier — and something that the response paper used — is simulations. Usually, researchers fit a statistical model to real data to estimate the model’s parameters. In simulations, things are flipped around: the parameters are set first, and the model is then used to generate fake data.

This lets researchers see how their model performs under various conditions, even when its assumptions are not met. In other words, it gives them an opportunity to sense-check a model: if they use it to generate data and then fit the same model to those data, they should end up with parameter estimates that are pretty close to the values they started with. This approach is more common now, including in statistical ecology, than it was when my co-authors and I published our 2014 paper.
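
A minimal sketch of such a simulation check, in Python, is given here. It does not use the model from the 2014 paper; it swaps in a much simpler textbook case, a two-session capture-recapture survey analysed with Chapman's version of the Lincoln-Petersen estimator. The true abundance of 1,000, the detection probabilities and all function names are assumptions chosen purely for illustration.

```python
import random
import statistics


def simulate_two_sessions(detection_probs, rng):
    """Generate fake data: each animal is caught or missed in two sessions."""
    n1 = n2 = both = 0
    for p in detection_probs:
        caught_first = rng.random() < p
        caught_second = rng.random() < p
        n1 += caught_first
        n2 += caught_second
        both += caught_first and caught_second
    return n1, n2, both


def chapman_estimate(n1, n2, both):
    """Chapman's bias-corrected Lincoln-Petersen abundance estimator."""
    return (n1 + 1) * (n2 + 1) / (both + 1) - 1


def mean_estimate(detection_probs, n_sims=500, seed=1):
    """Average the abundance estimate over many simulated surveys."""
    rng = random.Random(seed)
    estimates = [
        chapman_estimate(*simulate_two_sessions(detection_probs, rng))
        for _ in range(n_sims)
    ]
    return statistics.mean(estimates)


TRUE_N = 1000  # assumed true abundance, set before any data exist

# Scenario 1: the estimator's assumption holds (every animal equally detectable).
equal = [0.3] * TRUE_N

# Scenario 2: the assumption is violated (half the animals are hard to detect).
unequal = [0.1] * (TRUE_N // 2) + [0.5] * (TRUE_N // 2)

mean_equal = mean_estimate(equal)
mean_unequal = mean_estimate(unequal)

print(f"True abundance:                   {TRUE_N}")
print(f"Mean estimate, assumption met:    {mean_equal:.0f}")
print(f"Mean estimate, assumption broken: {mean_unequal:.0f}")
```

When every animal is equally detectable, the average estimate should land close to the 1,000 animals that were set at the start. When detectability differs between animals, the average should fall clearly below it, which is the kind of systematic underestimation that a simulation can expose before a method is published.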

Science works in increments

There is much value in the sequence of events that I’ve been involved in. A paper is published, and is followed by a response and sometimes a rejoinder. This is how science should operate: incrementally, by invalidating hypotheses or methods, whether in a single paper or across various papers, as researchers move slowly towards a deeper and more complete understanding of the world.

This approach highlights the iterative, self-correcting nature of science.

However, the business of publishing papers has become ill-suited to this ideal. Despite being crucial for the way in which science is disseminated, the act of reviewing papers is undervalued in research careers. And the act of correcting or retracting a paper can be reputationally damaging and personally embarrassing: often, these notices are associated with fraud or deception, rather than being seen as a sign of healthy scientific progress. Moreover, response papers and commentaries often lack the recognition and visibility that they deserve.

To bridge the gap between current publishing practices and the true purpose of scientific inquiry, several changes are necessary. First, in my view, we should elevate the role of peer review, recognizing its importance in maintaining the integrity of science. This might involve introducing incentives, such as valuing reviews as scientific contributions, incorporating them into tenure and promotion criteria, or even providing financial compensation. Second, we must shift the perception of paper corrections and retractions, viewing them as essential components of scientific progress rather than as signs of failure. Journals should promote dialogue between authors, and editors could make the whole process easier by routinely suggesting that authors write rejoinders, and by relaxing the constraints on length and time-to-submit.

I’d suggest to other scientists who find themselves in a similar situation that they take proactive steps to advocate for these changes — whether through editorial boards, professional societies or in their own institutions — to help realign the publishing process with the true spirit of scientific discovery.

Science is a human endeavour

Making mistakes is a fundamental part of being human, and because science is conducted by humans, mistakes happen as lines of research are pursued. However, this aspect of science is rarely emphasized; we don’t often like to acknowledge our imperfections.

Embracing our mistakes is crucial for personal and professional growth. In fact, we should go further and showcase our mistakes to our students and the public. It might help to repair the current crisis of confidence in science. One way is through a CV of failures, or a shadow CV. I guess I need to add another line to mine.